AGI Timelines Keep Moving—Toward Us

Heads up, if you aren't watching this week to week

Remember the halcyon days of 2021, when AGI was a comfy “sometime after I’m dead” problem? Forecasts floated anywhere from twenty to a hundred years. Nobody lost sleep except the nerds.

Then the dates started shifting.

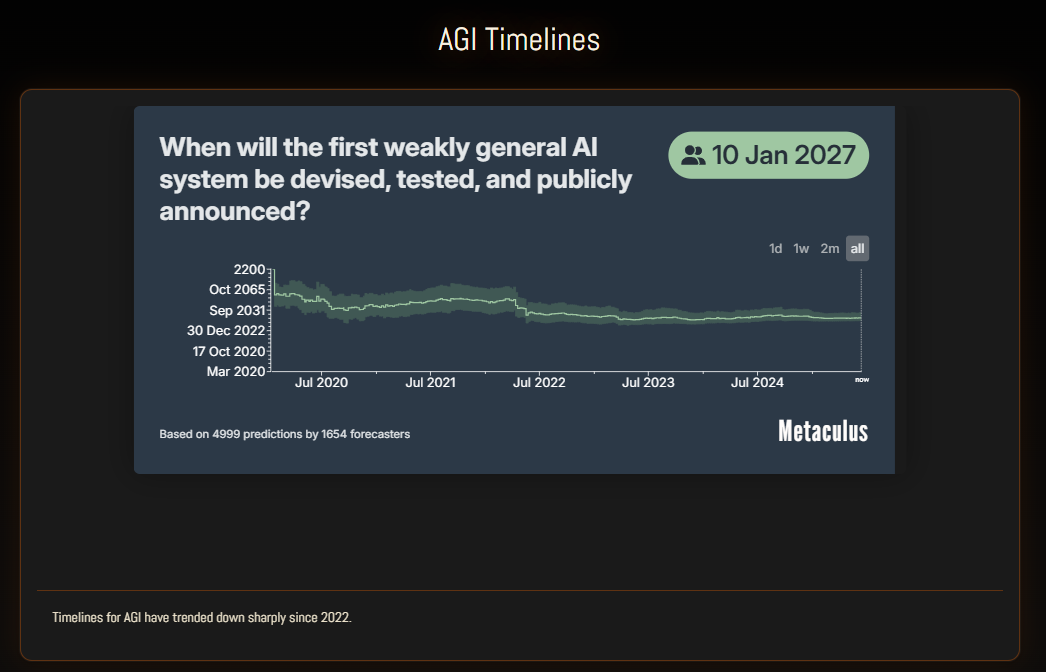

2020: Metaculus—my favorite crowd-forecasting site—pegs “first AGI” at 2052.

Today: Same hive mind says January 2027. That’s 18 months. If that holds, you’ll have AGI before you have to change the batteries in your smoke detector.

The cope that “AGI is always five years away” doesn’t hold up. If anything, our forecasts keep overshooting. GPT-4 landed faster than most experts guessed; image, video, and music models followed in months (Google “Will Smith eating spaghetti”); o3 kicked off an entirely new scaling paradigm. Deadlines aren’t slipping; they’re collapsing.

Could AGI 2027 still be wrong? Absolutely. But the new window—2 to 10 years—isn’t sci-fi territory. It’s strategic-planning territory. If AGI shows up this decade, governments, companies, and regular humans are going to be wildly unprepared at the rate we are going.

So why do people still wave the topic away? I’m looking at you, normalcy bias. Our brains are wired for incremental change. We default to “surely not yet” because yesterday looked like today, and today mostly does too… except for the part where your new design hire is now Midjourney.

Here’s the takeaway: stop treating rapid timelines as clickbait. They might be off, but they’re plausible enough to plan against.

Ask yourself what it means for your career, investments, or kids’ education now, before the smoke alarm starts going off.

Realizing that the ai-2027 timeline might be real, I now understand we need to think drastically more about safety. I'm very late to the party, but I'm ready to spend serious time on those discussions now.