Stop Calling Everything AGI

Our language isn’t keeping up with our language models

Ask me in Europe where I live and I say “the USA”. Ask me in Chicago and I say “Boston”. Ask me in Boston and you get “by the Kendall Square T stop.” If I answered the question of where I lived with “the Earth,” you would think I was being a jerk.

The closer you are to something, the more precise your words need to be.

The Three-Bucket Problem

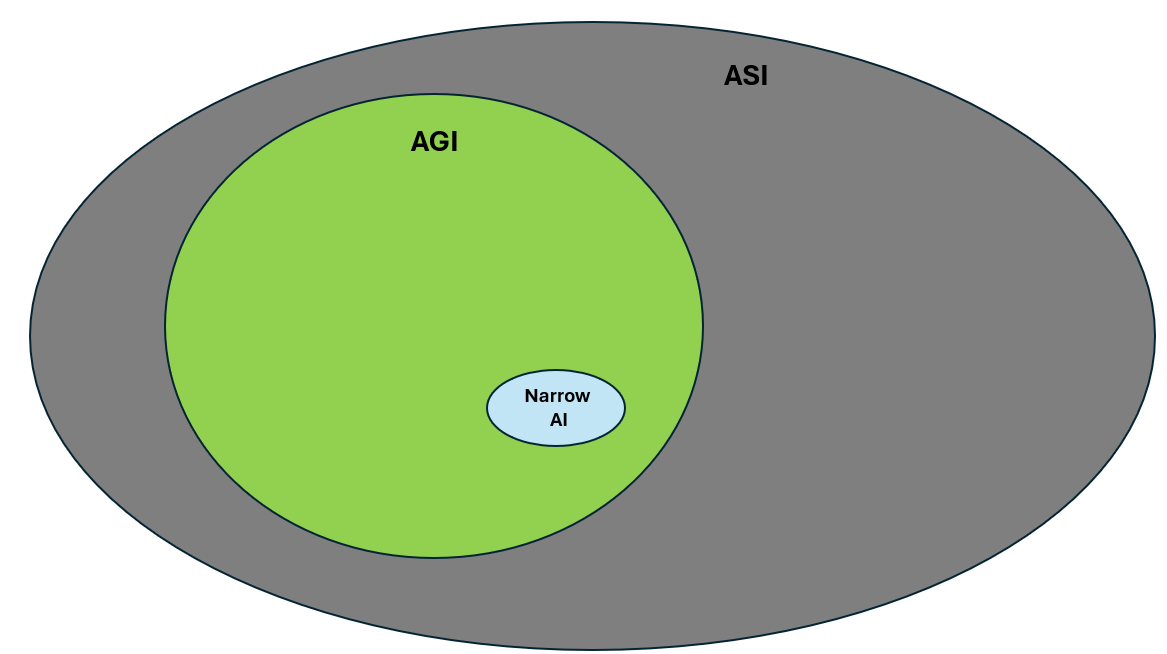

For decades our AI map had three labels:

Narrow AI – great at one task, useless at everything else (think Google Maps)

AGI – matches humans on nearly every intellectual metric (think Samantha from Her at the beginning of the movie)

ASI – outclasses humans on all dimensions by a large margin (think Samantha from Her at the end of the movie)

In n-dimensional capability-space, we have something like this:

That three-part scheme worked when AGI sat on a fifty-year horizon. Now that we are closer, we can see finer detail.

Today models write code, plan road trips, generate lifelike movies, discover new science, and develop government policy. Everything smarter than a spam filter gets dragged into the same debate about whether it is “really TRUE AGI” or “just […] on steroids” (I’m looking at you, r/singularity). The term AGI is overloaded and tearing at the seams.

The result looks like two drunks in a bar yelling about which quarterback is the GOAT. Same word, zero shared meaning.

Ability versus Skill

François Chollet’s foundational 2019 paper, On the Measure of Intelligence, which forms the basis for the ARC-AGI benchmark, separates intelligence (the ability to learn new skills) from the skills themselves. In his framework, a system can be skilled at an arbitrary number of tasks without being intelligent if it cannot generalize to learn new ones.

Skill without ability is inherently limited. Ability without skill is useless in practice. Keeping this distinction in mind reveals some missing labels that could clean up our current arguments, so we can move on to new, more interesting ones.

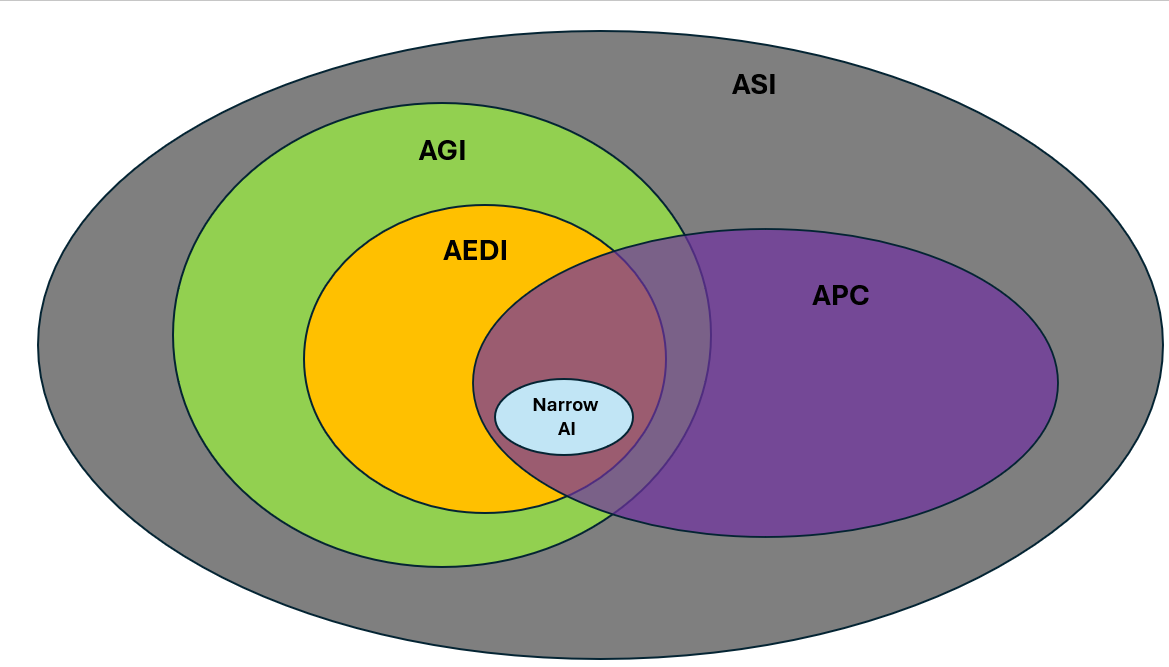

Two terms to fill the gap:

1. APC — Artificial Practical Competence

Definition: A non-human system that can accept a plain-language goal and complete the real-world steps with human-level reliability across many everyday domains.

The focus here is on useful skills rather than raw learning ability, sidestepping the general intelligence debate entirely. Is it “really thinking”? Does it have “true understanding”? For the purposes of APC, we don’t care. The questions here are “Can it schedule my kids’ summer activities?” and “Can it clean my bathroom?” Achieving APC would change how we do almost everything we currently do, but it would not create fundamentally new things as a first-order result.

2. AEDI — Artificial Economically Disruptive Intelligence

Definition: A non-human system with the ability to learn and carry out revenue-generating tasks with sufficient speed, breadth, and accuracy to reshape existing markets, labor demand, and price structures across many industries and at a global scale.

AEDI need not exhibit broad human-level cognition. Its intelligence may be confined to a limited set of commercial functions, so long as those functions produce significant economic disruption. Compared with an APC system, AEDI can acquire new profit-oriented skills without human intervention or a long lead time. However, along non-economic dimensions (tying shoelaces, for example), it may be less competent than an APC system.

Here’s what the enhanced n-dimensional capability-space diagram looks like now with the new terms added:

The Upshot

Cramming too many concepts into the term AGI leaves us arguing past one another. Adding APC (broad, practical skill) and AEDI (the “took our jobs” shock) to the AI taxonomy would bring these debates into clearer focus and let AGI sit at a higher level of both intelligence AND competence.